System breakdown

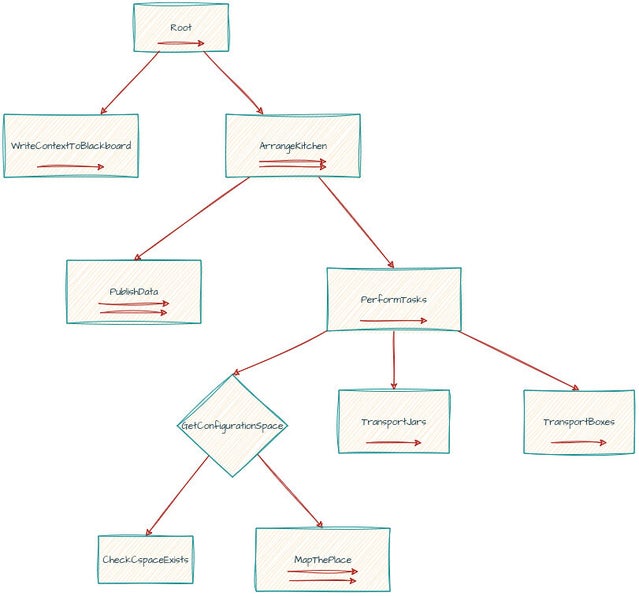

Here's how the behavior tree looks at the highest level of abstraction to achieve the solution:

- At the very beginning, we write all the parameters needed to run all behaviors, like the robot handles, points of interest, tunable parameters that depend on the overall context, etc.

- Next, we add the behaviors that publish the real-time data required by other behaviors, such as the pose of the robot. This means the robot's data is refreshed only as often as the behavior tree is ticked, but I can live with this for now. We can add behaviors here as needed.

- This is the main difference from previous implementations: I am trying to keep the behaviors from communicating with the device handles directly, and instead have the device handles share their data on the blackboard. If this were a ROS implementation, we would simply initialize nodes that publish the data in the background.

- Next, we go to a Selector node, which first checks whether the configuration space (c-space) exists and, if it does not, proceeds to the mapping behavior. In this behavior, the robot goes around the table in the kitchen and maps every nook and corner to create the c-space.

- We then transport the jars from the kitchen top to the table.

- Next, we transport the cereal boxes from the kitchen top to the table. This is a separate abstraction because, as mentioned in the problem statement, these boxes require some non-prehensile manipulation, i.e., moving them into a pose that allows us to generate a grasp pose within the robot's workspace. Bunching this together with the behaviors below the TransportJars behavior might make that subtree unnecessarily complicated.
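The top-level structure described above can be sketched in plain Python. This is a toy model of Sequence and Selector semantics, not the py_trees API; the leaf names (`WriteParameters`, `PublishPose`, `CSpaceExists`, `MapEnvironment`, `TransportCerealBoxes`) and the dictionary used as a blackboard are assumptions made for illustration.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "SUCCESS"
    FAILURE = "FAILURE"
    RUNNING = "RUNNING"


class Leaf:
    """Leaf behavior wrapping a function: blackboard -> Status."""

    def __init__(self, name, fn):
        self.name = name
        self.fn = fn

    def tick(self, blackboard):
        # Record which leaves ran, so the control flow is easy to inspect.
        blackboard.setdefault("trace", []).append(self.name)
        return self.fn(blackboard)


class Sequence:
    """Ticks children in order; stops at the first child that is not SUCCESS."""

    def __init__(self, name, children):
        self.name = name
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            status = child.tick(blackboard)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Selector:
    """Ticks children in order; stops at the first child that is not FAILURE."""

    def __init__(self, name, children):
        self.name = name
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            status = child.tick(blackboard)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def write_parameters(bb):
    bb.setdefault("params", {"points_of_interest": []})  # placeholder values
    return Status.SUCCESS


def publish_pose(bb):
    bb["pose"] = (0.0, 0.0, 0.0)  # placeholder; a real leaf would read the devices
    return Status.SUCCESS


def cspace_exists(bb):
    return Status.SUCCESS if "cspace" in bb else Status.FAILURE


def map_environment(bb):
    bb["cspace"] = "placeholder c-space"
    return Status.SUCCESS


def transport(bb):
    return Status.SUCCESS  # stands in for a whole subtree


def build_tree():
    return Sequence("Root", [
        Leaf("WriteParameters", write_parameters),
        Leaf("PublishPose", publish_pose),
        Selector("EnsureCSpace", [
            Leaf("CSpaceExists", cspace_exists),
            Leaf("MapEnvironment", map_environment),
        ]),
        Leaf("TransportJars", transport),
        Leaf("TransportCerealBoxes", transport),
    ])
```

On the first tick the Selector falls through to `MapEnvironment`; once the c-space is on the blackboard, later ticks skip the mapping leaf. Note that this toy Sequence has no memory, so every tick restarts from the first child.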

System Characteristics

- This behavior tree will be implemented in py_trees. This does impose a limitation: the library doesn't provide truly parallel nodes, in the sense that they do not run on separate threads. Instead, the parallelism here means that the behaviors under the Parallel composite are ticked in sequence every time the behavior tree is ticked from the root down.

- Although this does allow for some pseudo-parallelism, it's not true parallelism: if 2 behaviors need to run simultaneously and stay in sync, that can't be done. For instance, in my past project on garment automation, I needed the robot to execute a trajectory while the sewing machine was running. The trajectory execution was a blocking behavior, so the two could not run at the same time, and executing them one after the other was not really an option.

- Having said that, the library itself discourages heavy or long-running blocking behaviors.

- I can only run behaviors simultaneously if the actual work is done in a different process (such as a ROS node) and the behavior merely receives the status of that work through ROS interfaces.

- I like this idea, but for the purposes of this assignment, I am going to keep things simple. Once I am through with it, I might port the code over to a ROS-based architecture when I work on more complex versions of this solution that either tackle harder problems or make the setup more realistic, i.e., the localization is not done via the cheat sensors (GPS and compass), or I actually use a realistic perception module.

- As mentioned in the earlier point, ROS will not be used. However, I will shift the solution to ROS once I am through with this assignment. I have run into several instances where I could really benefit from one or more of the following:

- Visualization, especially of frames, in RViz.

- TF library for forward kinematics.

- Setting up dedicated ROS Nodes that allow us to not put heavy execution code in the behaviors.

- Other rich libraries, like Nav2, that some of the work can be offloaded to.

- Python / C++. Although I would prefer to code in Python to move things along quickly, some of the code is really slowing things down, the mapping code in particular. If I do move to C++, I am constrained to writing only the libraries that do not depend on Webots in C++ and providing Python bindings for them. My strategy is to try Python first; if code optimization, parallelizing the execution with libraries like cupy, etc. don't work, then I go for the nuclear option of porting the code to C++ and writing Python bindings.

- Minimize installation of external non-standard libraries. This is merely for the assignment submission, which forces me to keep things simple. I could submit a containerized solution, where the evaluator runs the solution in a container based on an image that must be built first. However, the evaluators are peers, and I have received an F on one of the assignments because I was using environment variables to append some paths to `sys.path`, which probably didn't work when some peers ran the solution on Windows instead of Linux. Another way of doing this is to use poetry to install dependencies from pip, but nah.

- The only way I can use a non-standard library like py_trees is to provide an instruction to install it before running the solution.
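The points above about pseudo-parallelism and blocking behaviors can be sketched as follows. This is a toy model in plain Python, not py_trees or ROS code: a worker thread stands in for the separate process, and the names `BackgroundJob`, `Parallel`, `run_until_done`, and the two `time.sleep` jobs are assumptions made for illustration. Each behavior starts its work on the first tick and afterwards only polls the status, so the composite can tick its children in sequence without any of them blocking the tree.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from enum import Enum


class Status(Enum):
    SUCCESS = "SUCCESS"
    FAILURE = "FAILURE"
    RUNNING = "RUNNING"


class BackgroundJob:
    """Starts `work` on a worker at the first tick, then only polls it."""

    def __init__(self, name, work, executor):
        self.name = name
        self.work = work
        self.executor = executor
        self.future = None

    def tick(self):
        if self.future is None:
            # submit() returns immediately; the work runs on a worker thread.
            self.future = self.executor.submit(self.work)
        if not self.future.done():
            return Status.RUNNING
        return Status.FAILURE if self.future.exception() else Status.SUCCESS


class Parallel:
    """Ticks every child once per tick, in order, on the calling thread."""

    def __init__(self, name, children):
        self.name = name
        self.children = children

    def tick(self):
        statuses = [child.tick() for child in self.children]
        if Status.FAILURE in statuses:
            return Status.FAILURE
        if all(status is Status.SUCCESS for status in statuses):
            return Status.SUCCESS
        return Status.RUNNING


def run_until_done(root, period_s=0.01, max_ticks=1000):
    """Tick the root at a fixed period; return the final status and tick count."""
    for tick_count in range(1, max_ticks + 1):
        status = root.tick()
        if status is not Status.RUNNING:
            return status, tick_count
        time.sleep(period_s)
    return Status.RUNNING, max_ticks


def demo():
    with ThreadPoolExecutor(max_workers=2) as executor:
        # Both jobs are placeholders that just sleep for a short while.
        trajectory = BackgroundJob("ExecuteTrajectory", lambda: time.sleep(0.1), executor)
        sewing = BackgroundJob("RunSewingMachine", lambda: time.sleep(0.1), executor)
        root = Parallel("SewSeam", [trajectory, sewing])
        return run_until_done(root)
```

Calling `demo()` ticks the tree several times while both jobs run side by side. The workers in this sketch do no device I/O; anything that touches the robot handle would need more care than shown here.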

Code Architecture

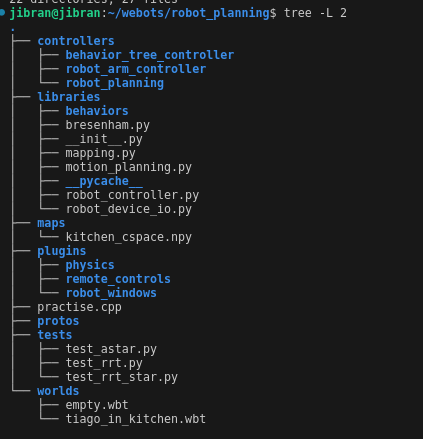

So far, the codebase looks like this. This is the convention recommended by Webots. There are only 2 directories of interest here:

- The controllers directory has all the different controllers. These run whenever the simulation is run, and only one controller can be run for a given simulation runtime instance.

- The libraries directory has the code I wrote to implement:

- Different algorithms and re-usable behaviors like path planning and navigation.

- Libraries that make calls to some Webots APIs. For instance, robot_device_io encapsulates all calls to initialize, set, and get values from the devices that are an integral part of the robot. I did violate this by putting the GPS and compass in it, whereas sensors like the range finder are not a part of this class.

- Behaviors that can be re-used across different behavior trees, like planning a path or navigating across waypoints.

- The only thing I can think of that needs re-wiring is moving any devices that are not an integral part of the robot out of this class; other devices like encoders, robot joints, etc. can stay.

- I implement an abstraction to get an SE2 pose in the same class that handles device communication. This is a violation of the Single Responsibility Principle. I do not want to have to change that class when I decide to, for instance, use encoders instead of GPS, or maybe introduce polymorphism.

- However, I do have to make sure that these different classes, which all access the devices via one common robot handle, do not get into a race condition, i.e., that no 2 calls to the robot handle are made at the same time. This is guaranteed by the single-threaded execution of this behavior tree library.
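One way to separate pose estimation from device communication, as discussed above, is sketched below. Everything here is hypothetical: `FakeDeviceIO` stands in for the device class and does not call any Webots API, and `SE2Pose`, `PoseEstimator`, `GpsCompassPoseEstimator`, `StaticPoseEstimator`, and `publish_pose` are names invented for illustration. The heading formula is also an assumed convention that would need checking against the world's axes.

```python
import math
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class SE2Pose:
    x: float
    y: float
    theta: float  # heading in radians


class PoseEstimator(Protocol):
    """Anything that can report an SE2 pose; callers depend only on this."""

    def pose(self) -> SE2Pose: ...


class FakeDeviceIO:
    """Hypothetical stand-in for the device class: it only returns raw values."""

    def __init__(self, gps_xyz, compass_xyz):
        self._gps_xyz = gps_xyz
        self._compass_xyz = compass_xyz

    def gps_values(self):
        return self._gps_xyz

    def compass_values(self):
        return self._compass_xyz


class GpsCompassPoseEstimator:
    """Derives the pose from GPS and compass readings."""

    def __init__(self, device_io):
        self._io = device_io

    def pose(self) -> SE2Pose:
        x, y, _ = self._io.gps_values()
        cx, cy, _ = self._io.compass_values()
        # Assumed convention: heading = atan2(cx, cy). Verify for your setup.
        return SE2Pose(x, y, math.atan2(cx, cy))


class StaticPoseEstimator:
    """Placeholder for a future estimator, e.g. one based on encoders."""

    def __init__(self, pose):
        self._pose = pose

    def pose(self) -> SE2Pose:
        return self._pose


def publish_pose(estimator: PoseEstimator, blackboard: dict) -> None:
    """Writes the current pose to the blackboard, whatever its source."""
    blackboard["pose"] = estimator.pose()
```

With this split, swapping GPS for encoders means writing a new estimator class; the device class and the code that calls `publish_pose` stay unchanged.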

Behaviors

- Mapping