NASA Space Robotics Challenge

Prelude

GitHub repository: https://github.com/WPI-NASA-SRC-P2/capricorn_competition_round

The Space Robotics Challenge was organized so that teams could compete to develop solutions for excavating natural resources (volatiles) from the moon's surface. It had 2 phases: Phase I was completed in 2017, and Phase II had 2 rounds, qualification and competition. My university, Worcester Polytechnic Institute, qualified for the competition round as one of the 22 teams that made it from among the 114 contenders. The qualification round involved performing a series of well-defined tasks.

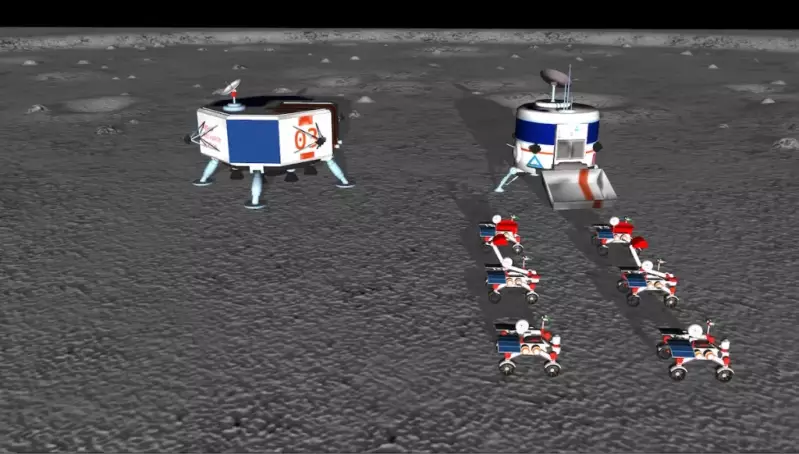

This was, of course, all in a Gazebo simulation provided by the organizers. The competition round was one big task: to excavate as many volatiles as possible from the crater-filled surface of the moon. The organizers gave us a maximum of 6 robots to use: 2 for scouting for volatiles, 2 for excavating them and 2 for hauling them to the landers (stations). Teams were ranked according to the amount of volatiles they excavated (different volatiles scored different points, depending on their value and rarity).

That's when I joined the team, for the competition round.

Solution

We had several sub-teams: Perception, Mapping, Planning, Navigation, Localization and Overall Management (this is a name I made up). Of course, anyone could contribute wherever they could make an impact.

- The perception stack was a trained model that detected known objects and, using the stereo camera mounted on the robots, localized them. These objects included other robots, craters, rocks, stations, etc.

- The mapping stack would decompose these objects into a 2D grid around the object, also known as a configuration space. This is where I started out.

- The planning and navigation stacks would plan and execute the robots' trajectories to destinations, avoiding any obstacles along the way.

- The localization stack would give the robot's location with the required accuracy and precision. The scout would communicate its location to a manager, and the manager would pass this location on to the hauler and the excavator. These two robots would arrive at the spot, the excavator would dig up the volatiles, and the hauler would return to the station to dump them.

- A manager was necessary to coordinate all this. We used state machines (link to directory provided, for anyone curious to have a look at the code) as a core component. Each robot was always in one of a finite number of states, and each state would enter, execute and exit a task, then either transition into the next task or trigger a recovery task. I was heavily involved with this once I finished working on mapping.
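
To make the enter / execute / exit / recover pattern concrete, here is a minimal Python sketch. It is not the code from our repository: the state names, the `Status` enum, the dictionary standing in for a robot and the `run` helper are all made up for illustration.

```python
from enum import Enum, auto


class Status(Enum):
    RUNNING = auto()
    SUCCEEDED = auto()
    FAILED = auto()


class State:
    """One task with enter / execute / exit hooks (hypothetical interface)."""

    def enter(self, robot):
        pass

    def execute(self, robot):
        return Status.SUCCEEDED

    def exit(self, robot):
        pass


class DriveToVolatile(State):
    def execute(self, robot):
        if robot.get("stuck"):
            return Status.FAILED
        robot["distance"] -= 1  # pretend we move one step per tick
        return Status.SUCCEEDED if robot["distance"] <= 0 else Status.RUNNING


class Dig(State):
    def execute(self, robot):
        robot["volatiles"] = robot.get("volatiles", 0) + 1
        return Status.SUCCEEDED


class Recover(State):
    def execute(self, robot):
        robot["stuck"] = False  # e.g. back up and try again
        return Status.SUCCEEDED


STATES = {"drive": DriveToVolatile(), "dig": Dig(), "recover": Recover()}

# (current state, outcome) -> next state; a missing entry ends the run.
TRANSITIONS = {
    ("drive", Status.SUCCEEDED): "dig",
    ("drive", Status.FAILED): "recover",
    ("recover", Status.SUCCEEDED): "drive",
}


def run(states, transitions, initial, robot, max_ticks=100):
    """Tick the machine until no transition is defined or ticks run out."""
    current = initial
    history = [current]
    states[current].enter(robot)
    for _ in range(max_ticks):
        status = states[current].execute(robot)
        if status is Status.RUNNING:
            continue  # stay in the same state for another tick
        states[current].exit(robot)
        nxt = transitions.get((current, status))
        if nxt is None:
            break
        current = nxt
        history.append(current)
        states[current].enter(robot)
    return history


robot = {"distance": 2, "stuck": True}
history = run(STATES, TRANSITIONS, "drive", robot)
```

In this toy run the robot starts out stuck, so the machine visits `drive`, `recover`, `drive` and finally `dig`. Keeping the transitions in a table separate from the states is just one design choice; it makes the recovery paths easy to see at a glance.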

My role

Broadly speaking, these were my roles (happy to talk more about them):

- Mapping. I developed code to map the obstacles into the local map.

- Localization. I developed the prediction models for the sensor fusion library, which involved developing the kinematic models. I also fed the IMU and the perception output(s) in as observations.

- Our localization, however, fell short of the requirements, and there was significant odometry drift. I then developed an odometry resetter, which took advantage of two facts: the stations remained visible to the robots as long as they didn't end up in a crater, and the stereo camera still performed well at these distances. This improved our solution significantly, getting us a lot more points.

- State machines. I wrote all the micro-state machines, which had the context of an individual robot and performed the different tasks. I worked with Ashay, who developed the "macro" state machines, which had the context of a set of robots, all the way up to the manager, which had complete context.

- Troubleshooting. Since I developed and tested the state machines, I was exposed to the problems in all the stacks. I would troubleshoot them myself, or assist the owner of the task in troubleshooting.
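
The idea behind the odometry resetter can be sketched roughly as follows. This is a simplified 2D illustration, not our actual implementation. It assumes the station's map position is known, the stereo camera gives the station's position in the robot frame (x forward, y left), and the heading from the IMU can be trusted. All names here are hypothetical.

```python
import math


def robot_position_from_landmark(landmark_world, landmark_in_robot, yaw):
    """Recover the robot's world (x, y) from one sighting of a known landmark."""
    lx, ly = landmark_in_robot
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate the robot-frame offset to the landmark into the world frame.
    dx = c * lx - s * ly
    dy = s * lx + c * ly
    wx, wy = landmark_world
    # The robot sits at the landmark position minus that offset.
    return (wx - dx, wy - dy)


class OdometryResetter:
    """Keeps an additive correction that cancels accumulated odometry drift."""

    def __init__(self):
        self.offset = (0.0, 0.0)

    def corrected(self, odom_xy):
        return (odom_xy[0] + self.offset[0], odom_xy[1] + self.offset[1])

    def reset(self, odom_xy, landmark_world, landmark_in_robot, yaw):
        true_xy = robot_position_from_landmark(
            landmark_world, landmark_in_robot, yaw
        )
        self.offset = (true_xy[0] - odom_xy[0], true_xy[1] - odom_xy[1])
        return true_xy


# Made-up numbers: station at (10, 5), robot facing +x and seeing the
# station 6 m straight ahead, while drifted odometry reports (4.8, 5.5).
resetter = OdometryResetter()
station = (10.0, 5.0)
drifted_odom = (4.8, 5.5)
fixed = resetter.reset(drifted_odom, station, (6.0, 0.0), yaw=0.0)
after = resetter.corrected(drifted_odom)
```

Each time a station is in view, the offset is recomputed, so drift only accumulates between sightings. A real system would also need to deal with measurement noise and heading error, which this sketch ignores.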

Results

We were averaging 30-40 points by the time we submitted our solution. Unfortunately, when the results came back, we had averaged 9 points. I do think we spared no effort, though, and the experience was the reward! Here's a video of our performance:

Acknowledgements

Goes without saying, this was a massive effort!

- The team who pulled through and were a pleasure to work with!

- Ashay, the team lead who managed and guided the team through all the phases and towards the final round!

- Our academic advisors, Professor Michael Gennert and Professor Carlo Pinciroli, who gave us technical and financial support. Couldn't have done this without them!